Tacit API

Do you already have a way to gather voice and video data from your users?

Existing recordings can be passed directly to our AI models via our Tacit API.

This is a back-end-only solution for embedding Tacit into your platform, giving you complete control over the look and feel of the user experience and journey from start to finish.

Output: emotional, stress, and cognitive states

Using our research platform, you can precisely identify and measure affective states with millisecond accuracy. You can express the evoked emotions using scientific models such as Arousal-Valence scores, Plutchik's Wheel of Emotions, or the General Adaptation Syndrome (GAS) model. Additionally, you can calculate the cognitive workload required from your respondents with ease.

Lite Version:

ONLY AUDIO AND VIDEO

Remote analysis done at scale and in an affordable way.

This version analyses respondents’ emotional reactions and body and hand movements in past sessions, and correlates them with objects detected within the browsing session itself. It is fully remote and analyses consumers’ behaviour at scale.

The lite version uses:

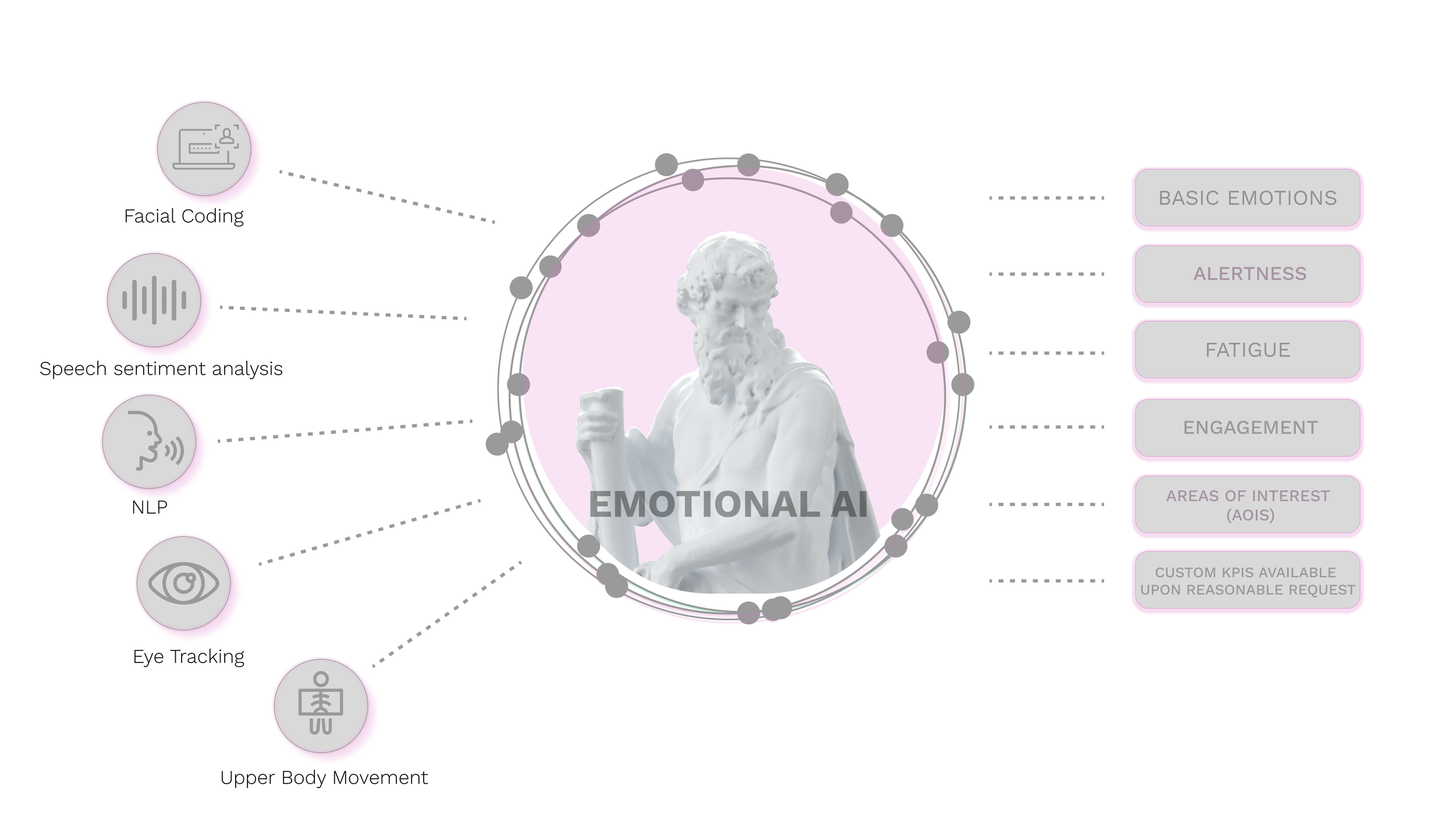

Facial coding: analysing the micro expressions on consumers’ faces

Speech sentiment analysis: analysing what consumers say and the tone and pitch of their voice

Heart rate and respiration rate (beta): analysing the RGB signal to estimate heart rate (HR), respiration rate and changes from baseline

Posture and body movement: analysing consumers’ posture and movements during screen time

Hand movements: analysing movements of hands, if and when visible on the stream

Object detection: analysing which objects appear on the screen, in order to detect correlations

PRO Version:

Granular biometrics through wearables

Providing detailed personalised analysis by harvesting EEG, ECG, fine movement and other biometric data during digital browsing sessions.

This version includes a mini lab testing environment, enabling detailed monitoring of consumers’ behaviour using sleek wearable devices connected to a mobile app, with data analysed and visualised in an online dashboard. The lab is mobile, and provides real time data pre-processing and analysis.

Each data source is logged with a timestamp, so readings can be correlated with millisecond precision against what computer vision detects on the screen.
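To illustrate how timestamped streams can be lined up, here is a minimal sketch in Python. It is not Tacit's implementation: the function names, the `(timestamp_ms, value)` tuple layout and the nearest-timestamp matching rule are all assumptions made for this example.

```python
from bisect import bisect_left


def nearest_index(timestamps_ms, t_ms):
    """Return the index of the entry in sorted `timestamps_ms` closest to `t_ms`."""
    if not timestamps_ms:
        raise ValueError("timestamps_ms must not be empty")
    i = bisect_left(timestamps_ms, t_ms)
    if i == 0:
        return 0
    if i == len(timestamps_ms):
        return len(timestamps_ms) - 1
    before, after = timestamps_ms[i - 1], timestamps_ms[i]
    # Ties go to the earlier entry.
    return i if (after - t_ms) < (t_ms - before) else i - 1


def align(samples, detections):
    """Pair each biometric sample with the nearest on-screen detection.

    Both inputs are lists of (timestamp_ms, value) tuples (a hypothetical
    layout); `detections` must be sorted by timestamp.
    """
    detection_times = [t for t, _ in detections]
    return [
        (t, value, detections[nearest_index(detection_times, t)][1])
        for t, value in samples
    ]


# Made-up example data: two biometric samples and two detected objects.
samples = [(1000, 0.2), (1510, 0.8)]
detections = [(990, "logo"), (1500, "price tag")]
aligned = align(samples, detections)
```

A production pipeline would also need to handle clock drift between devices and to reject matches that are too far apart in time. Both are left out here for brevity.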

The Pro version makes both raw and analysed data available on the dashboard.

The Pro version uses:

EEG signals: using a sleek wearable, we collect EEG data with millisecond accuracy and use it for neuro-specific metrics

ECG signals: using cardiac data, including inter-beat interval (IBI) and heart rate variability (HRV), to detect emotional and physiological states

Fine movement: using accelerometers and gyroscopes to detect and map out fine body movement

Psychometrics: psychological profiling of each consumer based on simple standardised questionnaires.
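As an illustration of the IBI and HRV measures mentioned under ECG signals, the sketch below computes two widely used time-domain HRV statistics, SDNN and RMSSD, from a list of inter-beat intervals. It is a generic textbook calculation and not a description of how the Pro version processes ECG data. The function name and the input values are assumptions.

```python
import math
import statistics


def hrv_metrics(ibi_ms):
    """Compute basic time-domain HRV metrics from inter-beat intervals in milliseconds."""
    if len(ibi_ms) < 2:
        raise ValueError("need at least two inter-beat intervals")
    mean_ibi = statistics.fmean(ibi_ms)
    # SDNN: sample standard deviation of the intervals.
    sdnn = statistics.stdev(ibi_ms)
    # RMSSD: root mean square of successive differences.
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    rmssd = math.sqrt(statistics.fmean([d * d for d in diffs]))
    return {
        "mean_hr_bpm": 60000.0 / mean_ibi,
        "sdnn_ms": sdnn,
        "rmssd_ms": rmssd,
    }


# Made-up intervals for illustration only.
metrics = hrv_metrics([800, 810, 790, 800])
```

Real recordings usually need artefact removal (for example ectopic beats and motion noise) before these statistics are meaningful.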